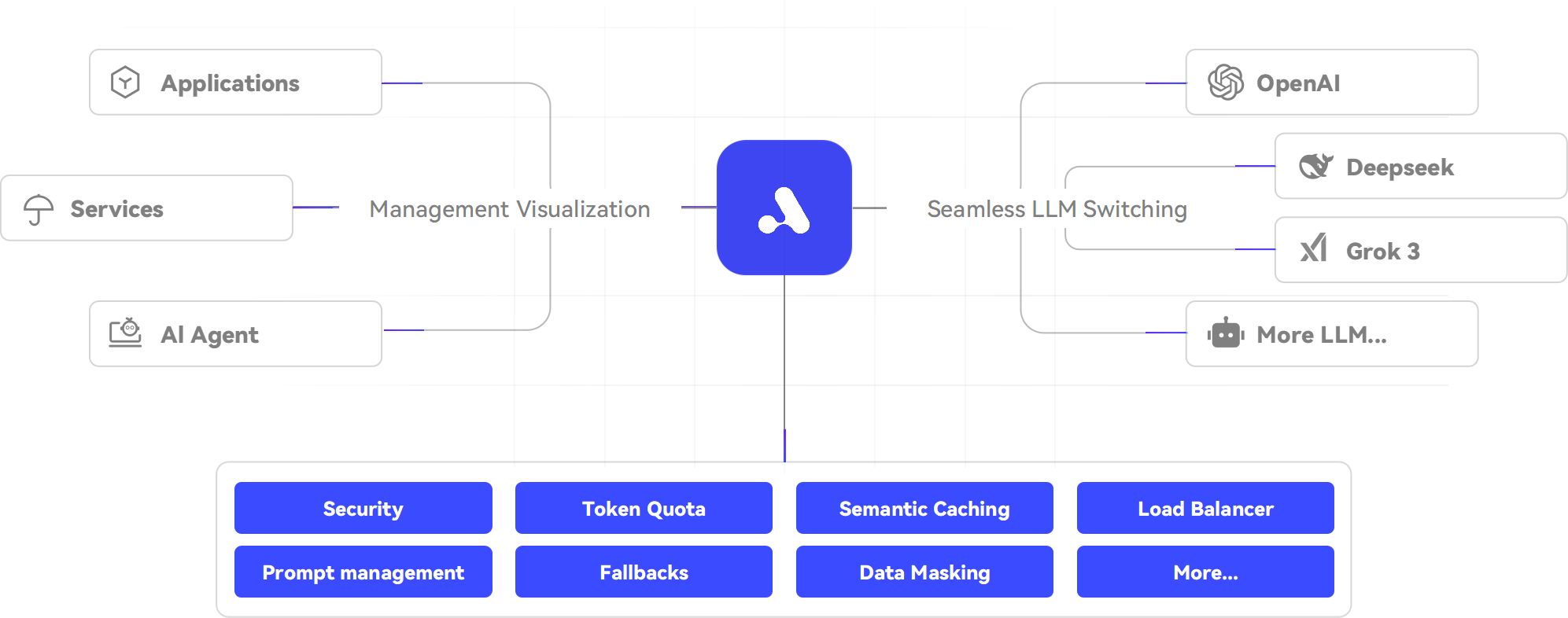

One Platform Connects 200+ LLMs.

APIPark will continue to add more LLMs. If you can’t find what you need, feel free to submit an issue.

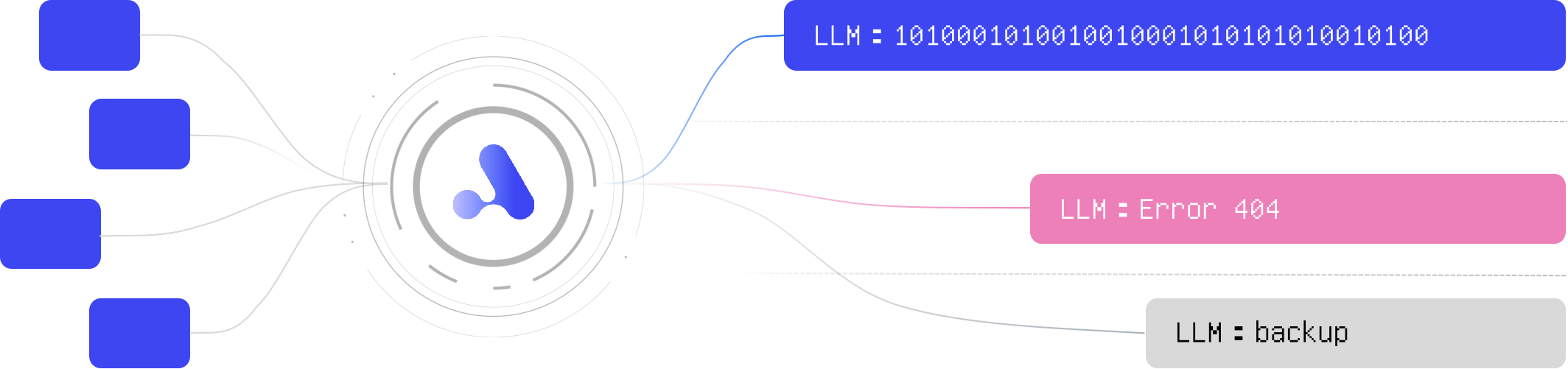

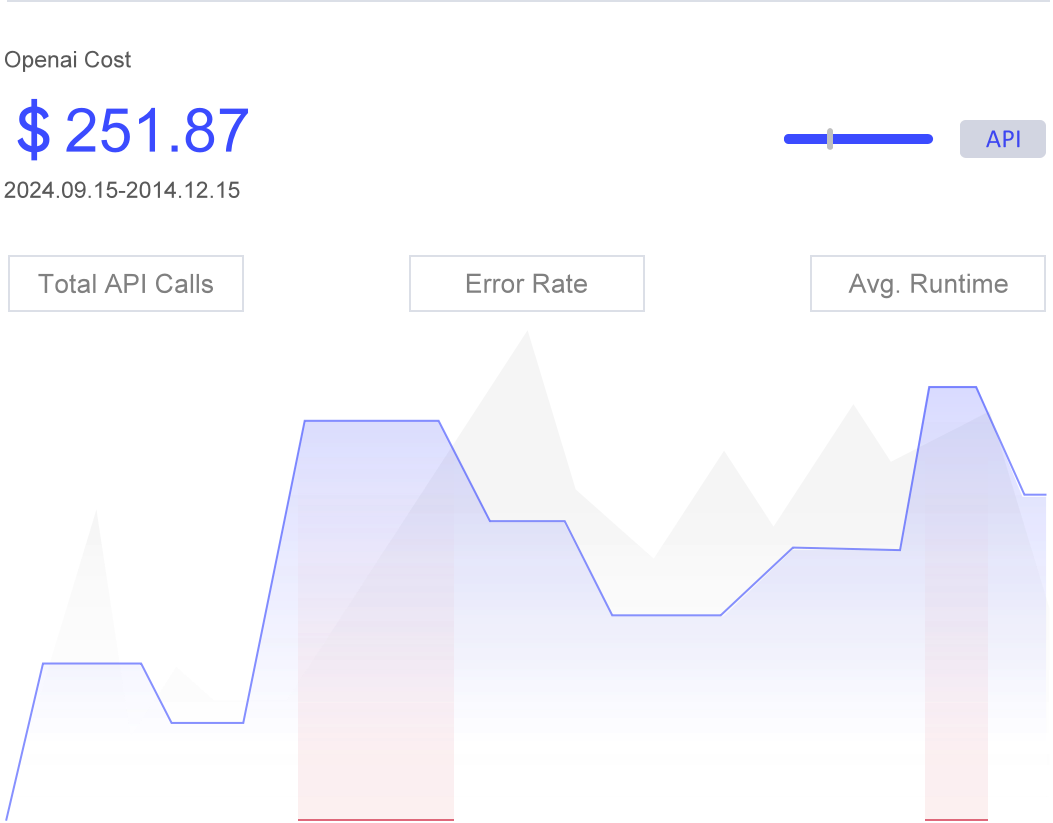

Drive LLMs Efficiently and Smartly to Production.

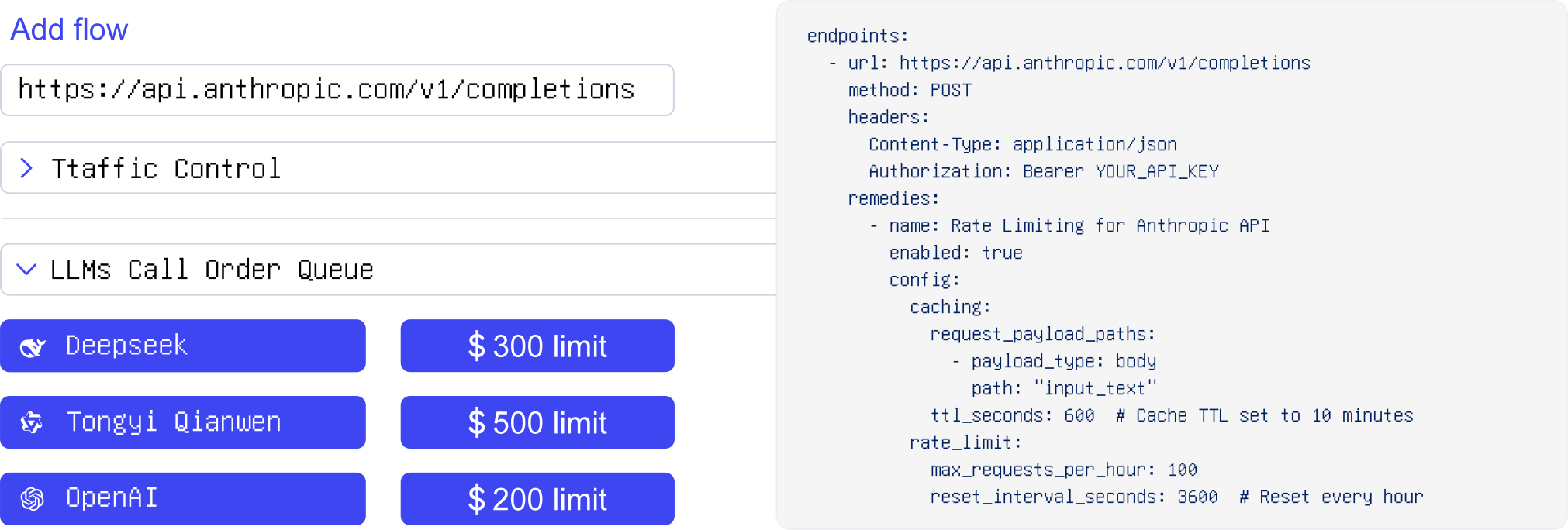

Fine-Grained Traffic Control for LLMs.

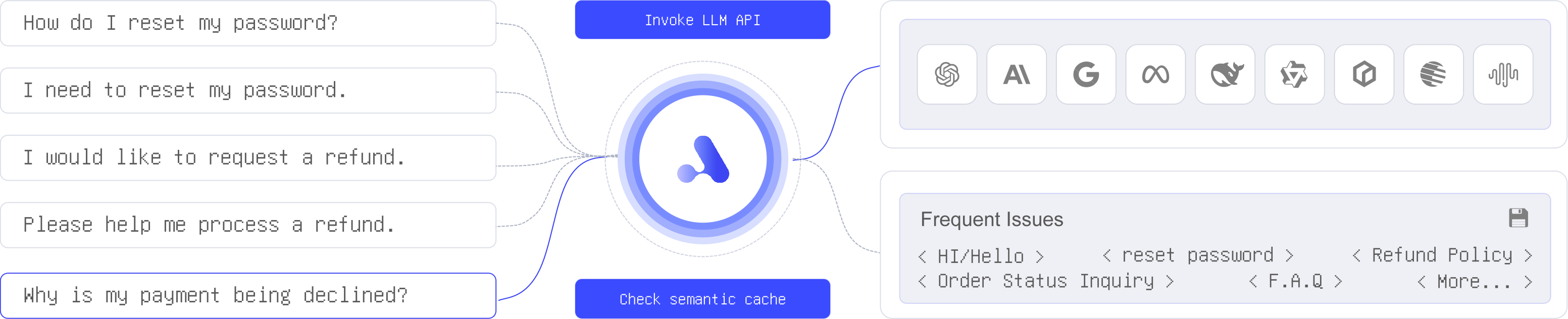

Caching Strategies for AI in Production.

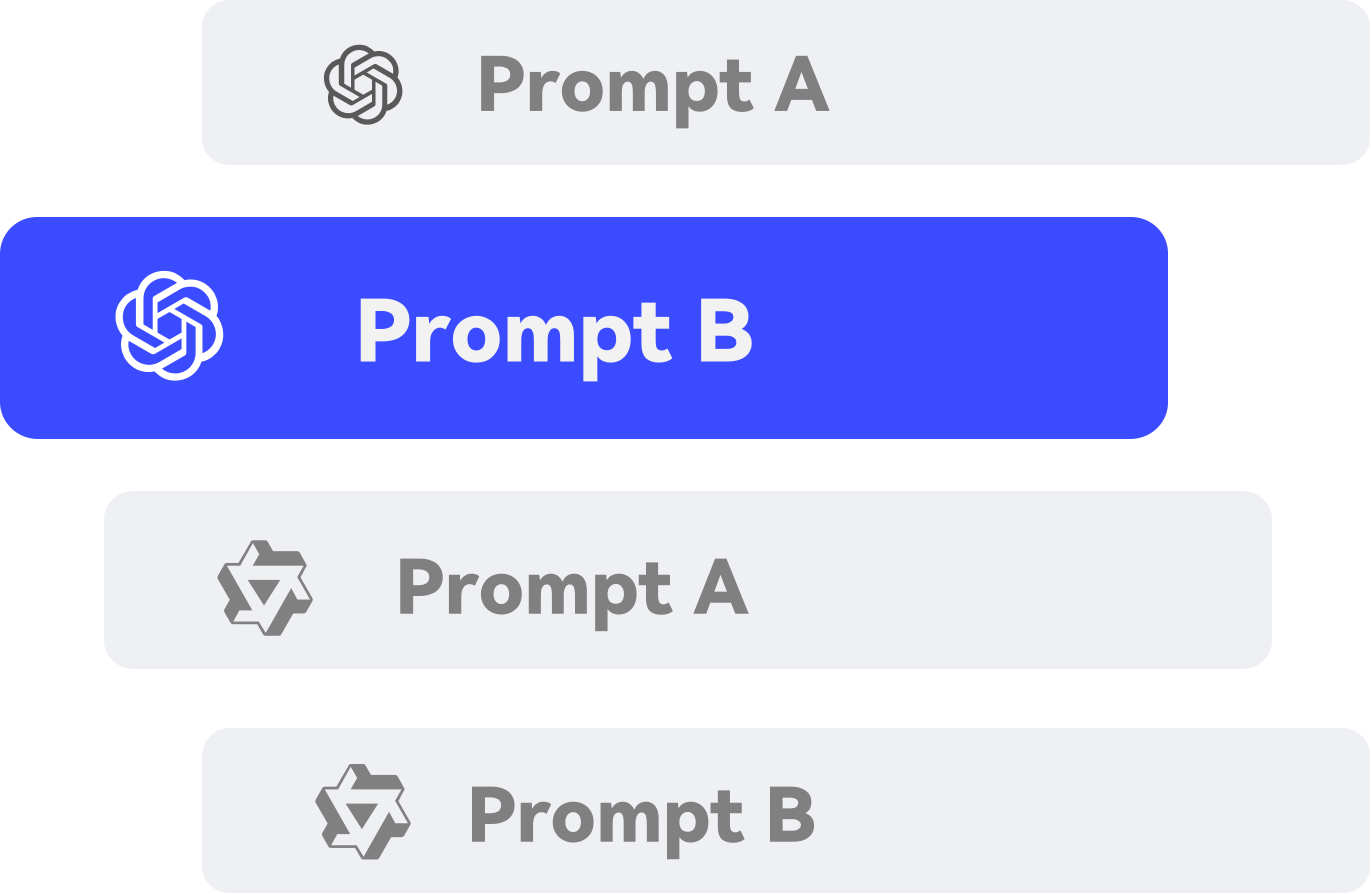

Flexible Prompt Management in Production.

APIPark x AI Agent

Expanding scenarios for AI applications.

APIPark will continue to add more AI Agents. If you can’t find what you need, feel free to submit an issue.

Just one command line

Deploy your LLM Gateway & Developer Portal in 5 minutes

Why choose APIPark?

APIPark delivers better performance than Kong and Nginx, handling high concurrency and large requests with low latency and high throughput.

Simple APIs, clear documentation, and flexible plugin architecture allow easy customization and quick integration, reducing complexity.

Easily integrates with existing tech stacks and works with popular platforms and tools, eliminating the need for system overhauls.

Built-in API authentication, traffic control, and data encryption features ensure the security and data protection of enterprise business applications.

FAQ

Have more questions?

Please refer to the following related questions.

An LLM gateway, also known as an AI gateway, is a middleware platform that helps businesses manage large language models (LLMs) efficiently. It streamlines integration, letting organizations connect to multiple AI models at once. It also provides management features that give businesses finer oversight of their AI models, improving both the security and the effectiveness of their AI use.

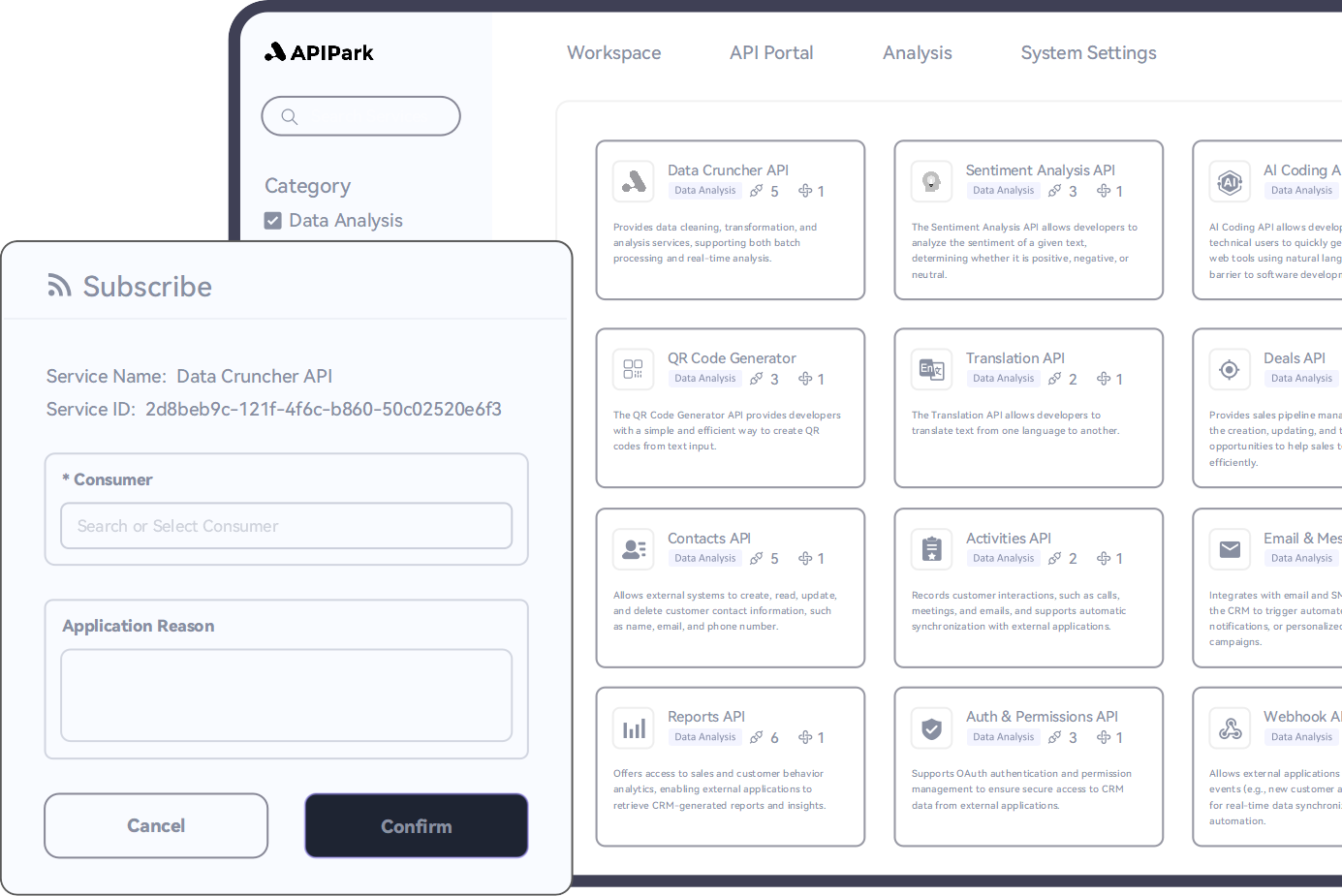

As an LLM (Large Language Model) gateway and API platform, APIPark simplifies LLM call management and integrates API services efficiently. It provides fine-grained control over LLM usage, helping to reduce costs, improve efficiency, and prevent overuse. APIPark also offers detailed usage analytics so users can monitor and optimize LLM consumption.

As a centralized LLM gateway and API platform, APIPark supports easy integration and management of both internal and external APIs, with strong security and access control. The platform is scalable, so users can adjust it to their business needs.
